Dam incidents and failures can fundamentally be attributed to human factors.

The field of “human factors” considers how and why systems meet or fail to meet performance expectations, with an emphasis on understanding and preventing incidents and failures. The systems considered in human factors work, such as dams, typically include both human and physical aspects and are sometimes referred to as “sociotechnical” systems. To prevent future dam failures, dam safety professionals must understand both physical factors and human factors, and how each contributes to failure or to safety. With respect to human factors, the following are key observations regarding past failures of dams and other systems:

• Failures are typically preceded by interactions of physical and human factors which begin years or decades prior to the failure.

• The interactions among physical and human factors are often not simple and linear. Instead, they may be complex and involve nonlinear relationships, feedback loops, causes having multiple effects, effects having multiple causes, and a lack of distinct “root causes” or dominant contributing factors.

• Interactions among physical and human factors usually generate “warning signs” which are not recognized, or not sufficiently acted upon, prior to the failure.

• Physical processes deterministically follow physical laws, with no possibility of physical “mistakes.” Therefore, failures – in the sense of human intentions not being fulfilled – are fundamentally due to human factors, as a result of human efforts individually and collectively “falling short” in various ways. A story of why a failure happened therefore cannot be complete without reference to contributing human factors.

• A natural tendency is for systems to move towards disorder and failure, in line with the concept of increasing “entropy” in physics. Therefore, systems such as dams are typically not inherently “safe,” and continual human effort is needed to maintain order and prevent failure.

• Systems such as dams, including the people involved in designing, building, operating, and managing them, tend to be conservatively configured with numerous “barriers,” all of which must be overcome for a failure to occur. This generally makes failures unlikely and results in very low overall failure rates. However, across a large number of systems, such as the approximately 90,000 dams in the United States, “unlikely” failures can be expected to occur occasionally when physical and human factors “line up” in an adverse way that overcomes all barriers.
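
The barrier argument can be illustrated with simple arithmetic. The Python sketch below assumes, purely for illustration, that each barrier is overcome independently with a hypothetical annual probability, and that every dam in a fleet of 90,000 shares the same per-dam failure probability. These numbers are not measured rates for any real dam, and real barriers are often correlated (the adverse “lining up” described above), which this simplification ignores.

```python
import math


def p_all_barriers_overcome(barrier_probs):
    """Probability that every barrier is overcome, assuming barriers fail independently."""
    return math.prod(barrier_probs)


def fleet_outcomes(p_failure_per_dam, n_dams):
    """Expected number of failures, and probability of at least one failure,
    across n_dams dams that share the same independent failure probability."""
    expected = p_failure_per_dam * n_dams
    p_at_least_one = 1.0 - (1.0 - p_failure_per_dam) ** n_dams
    return expected, p_at_least_one


# Hypothetical annual probabilities that each of four barriers is overcome
# (illustrative only, not data for any real dam).
barriers = [0.1, 0.1, 0.05, 0.02]

p_dam = p_all_barriers_overcome(barriers)         # about 1 in 100,000 per year
expected, p_any = fleet_outcomes(p_dam, 90_000)  # approximate U.S. dam count from the text

print(f"Per-dam annual failure probability: {p_dam:.1e}")
print(f"Expected failures per year across the fleet: {expected:.2f}")
print(f"Probability of at least one failure in a year: {p_any:.2f}")
```

With these hypothetical values, a per-dam annual failure probability of about one in 100,000 still implies roughly one expected failure per year across the fleet, and better-than-even odds of at least one failure in any given year.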

With these observations in mind, the propensity towards failure can be viewed as determined by the balance between human factors that contribute to failure (“demand”) and those that contribute to safety (“capacity”). Applying a standard engineering metaphor, failure results when the human-factors demand on the system exceeds its capacity, and safety results when capacity exceeds demand. The human factors contributing to “demand” on safety, and therefore to the potential for failure, can generally be placed into three categories of primary drivers of failure:

• Pressure from non-safety goals, such as delivering water, generating power, reducing cost, increasing profit, maintaining property values, meeting schedules, protecting the environment, providing recreational benefits, building and maintaining relationships, personal goals, and political goals.

• Human fallibility and limitations associated with misperception, faulty memory, ambiguity and vagueness in use of language, incompleteness of information, lack of knowledge, lack of expertise, unreliability of intuition, inaccuracy of models, cognitive biases operating at the subconscious level and group level, use of heuristic shortcuts, emotions, and fatigue.

• Complexity resulting from multiple system components having interactions which may involve nonlinearities, feedback loops, network effects, etc. Such interactions can result in large effects from small causes, including “tipping points” when thresholds are reached, and they make complex systems difficult to model, predict, and control. Complexity generally exacerbates the effects of human fallibility and limitations.
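
Borrowing the notation of structural reliability, the demand-versus-capacity metaphor described before this list can be written as a safety margin $M$. Here $D$ and $C$ are qualitative stand-ins for the human-factors demand (the three drivers above) and capacity (the human factors contributing to safety); the lesson does not define them as measurable quantities, so the expression is a way of organizing thinking rather than something to compute.

```latex
M = C - D, \qquad
\begin{cases}
M < 0 \;\; (D > C) & \text{failure: demand exceeds capacity} \\
M > 0 \;\; (C > D) & \text{safety: capacity exceeds demand}
\end{cases}
\qquad
P_f = P(M < 0) = P(D > C)
```

The probabilistic form $P_f$ reflects the observations above: even when capacity usually exceeds demand, uncertainty in both leaves a small but nonzero chance that demand exceeds capacity.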

The primary drivers of failure lead to various types of “human errors,” which can include categories such as “slips” (actions committed inadvertently), “lapses” (inadvertent inactions), and “mistakes” (intended actions with unintended outcomes, due to errors in thinking). In the context of dam safety, mistakes are the most common type of human error contributing to failures. “Violations” are also sometimes classified as a category of human error; they involve deliberate non-compliance with rules and procedures, usually because the rules or procedures are viewed as unworkable in practice.

For all categories of human error, judgments about what constitutes “error” are usually made in retrospect. They are therefore subject to the pitfalls of hindsight bias, as well as the fundamental attribution error, in which too much emphasis is placed on the attributes of an individual rather than on situational influences. Care must therefore be taken in forensic investigations to avoid readily assigning “blame.” Instead, investigators must put themselves in the shoes of the people whose decisions and actions are being evaluated, recognizing that those people faced pressures from their situational contexts, were inherently fallible and limited, and did not have the benefit of clear foresight when they made their decisions and took their actions.

Human errors and the underlying primary drivers of failure noted above often lead to inadequate risk management. Inadequacies in risk management may be classified into three types:

• Ignorance involves being insufficiently aware of risks. This may be due to aspects of human fallibility and limitations such as lack of information, inaccurate information, lack of knowledge and expertise, and unreliable intuition. Complexity can also contribute to ignorance.

• Complacency involves being sufficiently aware of risks but being overly risk tolerant. This may be due to aspects of human fallibility and limitations such as fatigue, emotions, indifference, and optimism bias (“it won’t happen to me”). Pressure from non-safety goals can also contribute to complacency.

• Overconfidence involves being sufficiently aware of risks but overestimating one’s ability to deal with them. This may be due to aspects of human fallibility and limitations such as an inherent overconfidence bias, which leads people to overestimate their knowledge, capabilities, and performance.
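
The definitions of the human-error categories and of the three types of inadequate risk management can be read as simple decision rules. The Python sketch below encodes them that way, purely as an illustration: the function names and yes/no inputs are hypothetical, the text does not present these as formal algorithms, and real cases seldom answer such questions cleanly, particularly when judged in hindsight. Complacency and overconfidence are returned as a set because nothing in their definitions makes them mutually exclusive.

```python
from enum import Enum
from typing import Set


class HumanError(Enum):
    SLIP = "slip"            # action committed inadvertently
    LAPSE = "lapse"          # inadvertent inaction
    MISTAKE = "mistake"      # intended action, unintended outcome (error in thinking)
    VIOLATION = "violation"  # deliberate non-compliance with rules or procedures


class RiskInadequacy(Enum):
    IGNORANCE = "ignorance"            # insufficiently aware of risks
    COMPLACENCY = "complacency"        # aware, but overly risk tolerant
    OVERCONFIDENCE = "overconfidence"  # aware, but overestimating ability to deal with risks


def classify_error(deliberate_noncompliance: bool, inadvertent: bool, acted: bool) -> HumanError:
    """Assign a human-error category, assuming an unintended outcome has already occurred."""
    if deliberate_noncompliance:
        return HumanError.VIOLATION
    if inadvertent:
        return HumanError.SLIP if acted else HumanError.LAPSE
    # Not inadvertent: the action was intended, but flawed thinking led to an unintended outcome.
    return HumanError.MISTAKE


def classify_risk_inadequacy(aware_of_risk: bool, overly_risk_tolerant: bool,
                             overestimates_ability: bool) -> Set[RiskInadequacy]:
    """Return every type of risk-management inadequacy that applies (possibly none)."""
    if not aware_of_risk:
        return {RiskInadequacy.IGNORANCE}
    found: Set[RiskInadequacy] = set()
    if overly_risk_tolerant:
        found.add(RiskInadequacy.COMPLACENCY)
    if overestimates_ability:
        found.add(RiskInadequacy.OVERCONFIDENCE)
    return found


# Hypothetical case: an engineer intentionally adopts a design assumption that proves wrong,
# while the owner knows of the risk but tolerates it to meet budget targets.
print(classify_error(deliberate_noncompliance=False, inadvertent=False, acted=True).value)
print(sorted(t.value for t in classify_risk_inadequacy(True, True, False)))
```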

Counterbalancing the drivers of failure noted above, the human factors contributing to system capacity for safety generally emanate from what is commonly referred to as “safety culture.” The general idea of safety culture is that individuals at all levels of an organization place high value on safety, which leads to a humble and vigilant attitude with respect to preventing failure. For such a safety culture to be developed and maintained, the organization’s senior leadership must visibly give priority to safety, including allocating the resources and accepting the tradeoffs needed to achieve it.

Experience in dam safety shows that strong safety cultures naturally lead to implementation of numerous “best practices” for dam safety risk management. As a corollary, dam incidents and failures are typically preceded by long-term, cumulative neglect of numerous accepted best practices. These best practices can be organized into two categories: general design and construction features of dam projects, and organizational and professional practices. Best practices for general design and construction features of dam projects include the following:

• General design and construction best practices – Generally accepted best practices for design and construction should be identified and applied.

• Design conservatism – Designs should be sufficiently conservative and provide factors of safety commensurate with uncertainties and risks. To the extent possible, designs should also provide physical redundancy, robustness, and resilience, and their potential failure modes should be progressive and controllable and should generate warning signs.

• Design customization – Designs should be customized to suit features of project sites. This involves “scenario planning” during design to prepare for situations that may be encountered during construction, testing during construction to verify that design assumptions and intent are met, and adapting the design during construction to address observed conditions.

• Budget and schedule contingencies – Provisions should be made for accommodating reasonable contingencies when establishing design and construction budgets and schedules.

Organizational and professional best practices include the following:

• Resources and resilience – Sufficient budget and staffing resources should be provided so that systems and people are not stretched to their limits, which increases error and failure rates. The organization should also be resilient, in the sense of having sufficient internal diversity and adaptive capability to provide a broad and flexible repertoire of responses to the potential challenges it faces.

• Humility, learning, and expertise – Individuals and organizations should humbly recognize the limitations of their knowledge and skills, engage in continuing education and training, learn from study of past incidents and failures, and draw on expertise wherever it may be found, rather than simply deferring to authority based on position in a hierarchy.

• Cognitive diversity – Teams should have cognitively diverse membership, to bring in diversity of perspectives, education, training, experience, information, knowledge, models, skills, problem-solving methods, and heuristics. With effective team leadership, structure, and group dynamics, cognitively diverse teams can avoid problems such as “groupthink,” and can outperform more homogeneous teams of the “best” people.

• Decision-making authority – Decision-making authority should be commensurate with responsibilities and expertise, rather than being undercut by organizational structure. This is particularly important for safety personnel, who should be selected for their positions based on relevant experience, vigilance, caution, humility, inquisitiveness, skepticism, discipline, meticulousness, communication ability, and assertiveness.

• System modeling – Appropriate system models should be developed, with a full range of potential failure modes identified, and emergency action plans developed accordingly. For actively operated systems, such as large hydropower dams, these failure modes should include operational failure modes, and it may be appropriate to explicitly account for interactions of physical and human factors in the system models. Where models are implemented through software, the software should be carefully developed, validated, and used.

• Checklists – Checklists should be used to reduce the incidence of human errors, especially for tasks which are relatively recurrent, such as inspections. Checklists should be customized for each situation, clear and unambiguous, focused on items which are important but prone to being missed, prepared at a level of detail appropriate for the time available to use the checklist, and regularly updated based on experience. Recognizing that checklists are most effective for prevention of slips, lapses, and violations, but somewhat less effective for prevention of mistakes, checklists should be used to supplement, not replace, situation-specific attentive observation and critical thinking.

• Information management – Information management should involve thorough documentation, open information sharing within and across organizations, and attentiveness to dissenting voices rather than dismissal of them. This enables fragmentary information to be surfaced and synthesized to help “connect the dots” and better understand system behavior.

• Warning signs – There should be vigilant monitoring to detect “warning signs” that a system is headed towards failure while a “window of recovery” is still available. This monitoring should be conducted at regular intervals, after unusual events, and also during apparent “quiet periods.” Once potential warning signs are detected, there should be prompt and appropriate investigative follow-up, verification of that follow-up, thorough documentation of observations and findings so that emerging patterns can be discerned and evaluated, and prompt implementation of any needed remedial actions. As a heuristic to help judge whether a potential warning sign warrants action, “simulated hindsight” can be used: fast-forward into the future, imagine that failure has occurred, and ask whether ignoring the potential warning sign was justifiable; if not, take it seriously.

• Standards – High professional, ethical, legal, and regulatory standards should be maintained, especially when lives are at stake.
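
The documentation and follow-up steps described under “Warning signs” lend themselves to a simple record-keeping sketch. The Python below is hypothetical throughout: the `Observation` record, the location labels, the one-year window, and the three-observation threshold are illustrative assumptions, not criteria from the text. It flags locations where observations are accumulating and lists observations still awaiting verified follow-up.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List


@dataclass
class Observation:
    observed_on: date
    location: str               # hypothetical location label, e.g. "downstream toe"
    description: str
    followed_up: bool = False   # has investigative follow-up been completed and verified?


def emerging_patterns(log: List[Observation], as_of: date,
                      window_days: int = 365, min_count: int = 3) -> Dict[str, int]:
    """Locations with at least min_count observations in the window ending on as_of."""
    cutoff = as_of - timedelta(days=window_days)
    counts: Dict[str, int] = defaultdict(int)
    for obs in log:
        if cutoff <= obs.observed_on <= as_of:
            counts[obs.location] += 1
    return {loc: n for loc, n in counts.items() if n >= min_count}


def awaiting_follow_up(log: List[Observation]) -> List[Observation]:
    """Observations whose follow-up has not yet been completed and verified."""
    return [obs for obs in log if not obs.followed_up]


log = [
    Observation(date(2023, 1, 10), "downstream toe", "wet area"),
    Observation(date(2023, 5, 2), "downstream toe", "clear seepage"),
    Observation(date(2023, 9, 15), "downstream toe", "turbid seepage", followed_up=True),
    Observation(date(2023, 6, 1), "crest", "longitudinal crack", followed_up=True),
]

print(emerging_patterns(log, as_of=date(2023, 12, 31)))
print([obs.description for obs in awaiting_follow_up(log)])
```

In keeping with the checklist guidance above, a tally like this supplements, rather than replaces, attentive observation and critical thinking about what the observations mean.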

In summary, organizations which are capable of handling demands on safety from various drivers of failure have a strong safety culture and diligently implement numerous best practices. Such organizations are mindful, cautious, humble, oriented towards learning and improving, resiliently adaptive, and maintain high professional and ethical standards. They vigilantly search for and promptly address warning signs before problems grow too large, and they make effective use of available information, expertise, resources, and management tools to properly balance safety against other organizational goals.

This lesson learned was peer-reviewed by Mark Baker, P.E., DamCrest Consulting.

Austin (Bayless) Dam (Pennsylvania, 1911)

Banqiao Dam (China, 1975)

Big Bay Lake Dam (Mississippi, 2004)

Buffalo Creek Dam (West Virginia, 1972)

Camará Dam (Brazil, 2004)

Cleveland Dam (British Columbia, 2020)

Columbia River Levees at Vanport (Oregon, 1948)

Edenville Dam (Michigan, 2020)

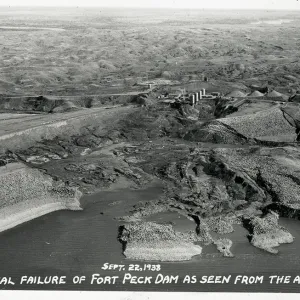

Fort Peck Dam (Montana, 1938)

Fujinuma Dam (Japan, 2011)

Ka Loko Dam (Hawaii, 2006)

Lake Delhi Dam (Iowa, 2010)

Langley Dam (South Carolina, 1886)

Laurel Run Dam (Pennsylvania, 1977)

Little Deer Creek (Utah, 1963)

Malpasset Dam (France, 1959)

Maple Grove Dam (Colorado, 1979)

Marshall Lake Dam (Colorado)

Meadow Pond Dam (New Hampshire, 1996)

New Orleans Levee System (Louisiana, 2005)

Oroville Dam (California, 2017)

Palagnedra Dam (Switzerland, 1978)

Sheffield Dam (California, 1925)

South Fork Dam (Pennsylvania, 1889)

Spencer Dam (Nebraska, 2019)

St. Francis Dam (California, 1928)

Taum Sauk Dam (Missouri, 2005)

Tempe Town Lake Dam (Arizona, 2010)

Teton Dam (Idaho, 1976)

Toddbrook Reservoir Dam (England, 2019)

Vajont Dam (Italy, 1963)

Walnut Grove Dam (Arizona, 1890)

Williamsburg Reservoir Dam (Massachusetts, 1874)

Wivenhoe Dam (Australia, 2011)

Big Dams & Bad Choices: Two Case Studies in Human Factors and Dam Failure

Failure of Sella Zerbino Secondary Dam in Molare, Italy

Human Factors in Dam Failure & Safety

Human Factors in Dam Failures

Additional Resources Not Available for Download

- Melchers, R. (1977) “Organizational Factors in the Failure of Civil Engineering Projects,” Transactions of Institution of Engineers Australia, GE 1, 48-53.

- Shaver, K. (1985) The Attribution of Blame: Causality, Responsibility, and Blameworthiness, Springer Verlag.

- Nowak, A., Ed. (1986) Modeling Human Error in Structural Design and Construction, ASCE.

- Reason, J. (1990) Human Error, Cambridge University Press.

- Senders, J. and Moray, N. (1991) Human Error: Cause, Prediction, and Reduction, Lawrence Erlbaum Associates.

- Plous, S. (1993) The Psychology of Judgment and Decision Making, McGraw-Hill.

- Sowers, G. (1993) “Human Factors in Civil and Geotechnical Engineering Failures,” ASCE Journal of Geotechnical Engineering, 119:2, 238–256.

- Rescher, N. (1995) Luck: The Brilliant Randomness of Everyday Life, Farrar Straus & Giroux.

- Dörner, D. (1997) The Logic of Failure: Recognizing and Avoiding Error in Complex Situations, Basic Books.

- Reason, J. (1997) Managing the Risks of Organizational Accidents, Ashgate Publishing Company.

- Rasmussen, J. (1997) “Risk management in a dynamic society: a modelling problem,” Safety Science, 27 (2-3), 183-213.

- Perrow, C. (1999) Normal Accidents: Living with High-Risk Technologies, Princeton University Press.

- Pidgeon, N. and O’Leary, M. (2000) “Man-made disasters: why technology and organizations (sometimes) fail,” Safety Science, 34 (1–3), 15–30.

- Patankar, M., Brown, J., Sabin, E., and Bigda-Peyton, T. (2001) Safety Culture, Ashgate Publishing Company.

- Gilovich, T., Griffin, D., and Kahneman, D., Eds. (2002) Heuristics and Biases: The Psychology of Intuitive Judgment, Cambridge University Press.

- Strauch, B. (2002) Investigating Human Error: Incidents, Accidents, and Complex Systems, Ashgate Publishing Company.

- Woods, D. and Cook, R. (2002) “Nine Steps to Move Forward from Error,” Cognition, Technology & Work, 4, 137–144.

- Hollnagel, E. (2004) Barriers and Accident Prevention, Ashgate Publishing Company.

- Dekker, S. (2005) Ten Questions about Human Error, CRC Press.

- Bea, R. (2006) “Reliability and Human Factors in Geotechnical Engineering,” ASCE Journal of Geotechnical and Geoenvironmental Engineering, 132:5, 631–643.

- Dekker, S. (2006) The Field Guide to Understanding Human Error, Ashgate Publishing Company.

- Hollnagel, E., Woods, D. and Leveson, N., Eds. (2006) Resilience Engineering: Concepts and Precepts, Ashgate Publishing Company.

- Page, S. (2008) The Difference: How the Power of Diversity Creates Better Groups, Firms, Schools, and Societies, Princeton University Press.

- Qureshi, Z. (2008) A Review of Accident Modelling Approaches for Complex Critical Sociotechnical Systems, Report No. DSTO-TR-2094, Australian Defence Science and Technology Organisation.

- Reason, J. (2008) The Human Contribution: Unsafe Acts, Accidents, and Heroic Recoveries, Ashgate Publishing Company.

- Gawande, A. (2010) The Checklist Manifesto: How to Get Things Right, Henry Holt and Company.

- Kiser, R. (2010) Beyond Right and Wrong: The Power of Effective Decision Making for Attorneys and Clients, Springer Verlag.

- Rosness, R., Grøtan, T., Guttormsen, G., Herrera, I., Steiro, T., Størseth, F., Tinmannsvik, R., and Wærø, I. (2010) “Organisational Accidents and Resilient Organisations: Six Perspectives, Revision 2,” No. Sintef A 17034, SINTEF Technology and Society, Trondheim.

- Woods, D., Dekker, S., Cook, R., Johannesen, L., and Sarter, N. (2010) Behind Human Error, 2nd Ed., Ashgate Publishing Company.

- Dekker, S. (2011) Drift into Failure: From Hunting Broken Components to Understanding Complex Systems, Ashgate Publishing Company.

- Kahneman, D. (2011) Thinking, Fast and Slow, Farrar, Straus and Giroux.

- Leveson, N. (2011) Engineering a Safer World: Systems Thinking Applied to Safety, MIT Press.

- Mitchell, M. (2011) Complexity: A Guided Tour, Oxford University Press.

- Salmon, P., Stanton, N., Lenne, M., Jenkins, D., Rafferty, L., and Walker, G. (2011) Human Factors Methods and Accident Analysis, Ashgate Publishing Company.

- Conklin, T. (2012) Pre-Accident Investigations: An Introduction to Organizational Safety, Ashgate Publishing Company.

- Hill, C. (2012) “Organizational Response to Failure,” 2012 USSD Conference Proceedings, 89-102.

- Alvi, I. (2013) “Engineers Need to Get Real, But Can't: The Role of Models,” ASCE Structures Congress 2013, 916-927.

- Catino, M. (2013) Organizational Myopia: Problems of Rationality and Foresight in Organizations, Cambridge University Press.

- Hollnagel, E. (2014) Safety-I and Safety-II: The Past and Future of Safety Management, Ashgate Publishing Company.

- Sunstein, C. and Hastie, R. (2015) Wiser: Getting Beyond Groupthink to Make Groups Smarter, Harvard Business Review Press.

- Weick, K. and Sutcliffe, K. (2015) Managing the Unexpected: Resilient Performance in an Age of Uncertainty, 3rd Ed., Jossey-Bass.